It’s been a while since my last update, though not for lack of action. I’ve been struggling with my latest project for the past few months, and I felt it was time to pull back the curtain a bit and show what I’ve been working on.

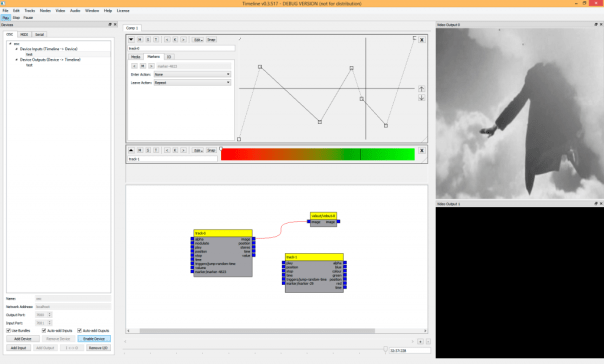

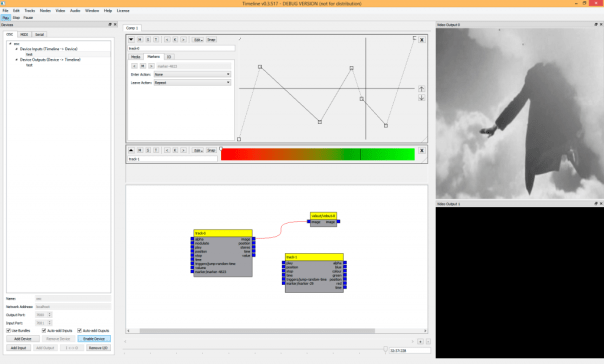

My original design for the Timeline software was a nice open-ended sequencer that could manipulate all manner of data, from single values (for MIDI or OSC control of parameters) to colours, audio, and even video. It combined this with flexible (possibly too flexible) control over how each track played back, with repeating sections, random markers, and all manner of tricks that I was getting really excited about using.

I’d spent almost a year working on it and had a pretty nice media playback engine, and everything seemed to be heading towards a 1.0 release back in June 2014. But then I hit a wall, which I have to say is pretty rare for me in my software development experience, as I’ve always had a clear idea of the role and function of each system I’m developing.

The problem was the growing complexity of visually managing the relationship between the different tracks of data and how these related to other applications and devices through the various input and output interfaces. I was also toying with the idea of being able to apply real-time effects to video and audio (also data) and these did not comfortably fit into the design I had come up with.

I’ve also slowly been working on another application called PatchBox that uses a node-based interface to visually build connections between blocks of functionality, so I took a deep breath, ripped the code apart, and put in a new interface:

The node interface went some way towards solving the problem of presenting the relationship between tracks and devices, but there was a major catch. The core code for the node system (it’s actually the code that drives several of my art installations, such as Shadows of Light) was rather incompatible with the core code of the Timeline application, so a hard decision had to be made:

- Release Timeline and PatchBox separately and fix the interface issue over time.

- Combine the two applications, which would require taking a massive step back equivalent to months of development time.

Not an easy one to make, compounded by the fact that, as a freelance artist, I’m basically paying for all the development time out of my own pocket until I get a product on sale, so the latter option was not to be taken lightly.

After a couple of weeks of chin stroking, frantic diagrams scratched in notebooks, thinking about what configuration would be most commercially viable, and false starts, I came to a final thought:

“Make the tool that you need for your art”

It’s not that I don’t want it to be a useful tool that other people will want to use and buy at some point (that would be lovely) but I’m not a software design company, and this is primarily an “art platform” for my own work so I have to listen to what feels right to me.

So, I chose the latter (of course), and I’ve been working on it at least a few hours a day, pretty much every day, for the past few months. The screenshot at the top of this post is the latest, showing a colour timeline track feeding into an OpenGL shader.

There is still much to be done and it’s pretty gruelling at times as I’m having to go over old ground repeatedly, but I feel like it’s heading in the right direction, and I’m already creating new artworks using it that wouldn’t have previously been possible.

Realistically, a 1.0 release now isn’t going to happen until 2015. With a long solo project like this it is easy to find yourself on the long slide into a quiet madness of complexity and introspection, so I’m planning more regular updates to at least have my progress kept in check by “real people”. To this end, if you have any comments, questions, or general messages of encouragement, I’d be happy to hear them.

My video mapping software Painting With Light has been living on its own website since it launched. I’ve been planning to incorporate it into the main bigfug.com website for a while now, and you may have noticed that this process is now underway.
