It’s been a while since my last update, though not from lack of action. I’ve been struggling with my latest project for the past few months, and I felt it was time to pull back the curtain a bit and show what I’ve been working on.

My original design for the Timeline software was a nice open-ended sequencer that could manipulate many types of data, from single values (for MIDI or OSC control of parameters) to colours, audio, and even video. It combined this with flexible (possibly too flexible) control over how each track played back, with repeating sections, random markers, and all manner of tricks that I was getting really excited about using.

I’d spent almost a year working on it and had a pretty nice media playback engine, and everything seemed to be heading towards a 1.0 release back in June 2014. But then I hit a wall, which I have to say is pretty rare in my software development experience, as I’ve always had a clear idea of the role and function of each system I’m developing.

The problem was the growing complexity of visually managing the relationship between the different tracks of data and how these related to other applications and devices through the various input and output interfaces. I was also toying with the idea of being able to apply real-time effects to video and audio (also data) and these did not comfortably fit into the design I had come up with.

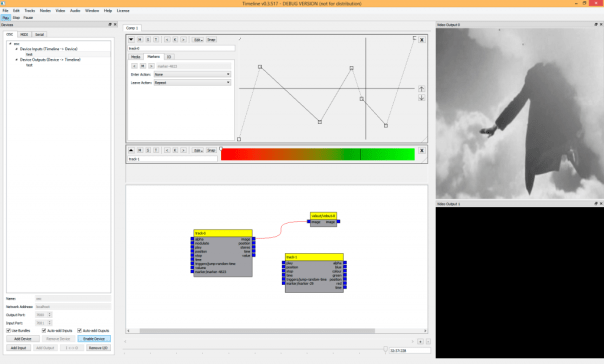

I’ve also slowly been working on another application called PatchBox that uses a node-based interface to visually build connections between blocks of functionality, so I took a deep breath, ripped the code apart, and put in a new interface:

The node interface went some way towards solving the problem of presenting the relationship between tracks and devices, but it raised a major problem of its own. The core code for the node system (it’s actually the code that drives several of my art installations, such as Shadows of Light) was rather incompatible with the core code of the Timeline application, and a hard decision had to be made:

- Release Timeline and PatchBox separately and fix the interface issue over time.

- Combine the two applications, which would require taking a massive step back equivalent to months of development time.

Not an easy one to make, compounded by the fact that as a freelance artist, until I get a product on sale, I’m basically paying for all the development time out of my own pocket so the latter option was not to be taken lightly.

After a couple of weeks of chin stroking, frantic diagrams scratched in notebooks, thinking about what configuration would be most commercially viable, and false starts, I came to a final thought:

“Make the tool that you need for your art”

It’s not that I don’t want it to be a useful tool that other people will want to use and buy at some point (that would be lovely) but I’m not a software design company, and this is primarily an “art platform” for my own work so I have to listen to what feels right to me.

So, I chose the latter (of course) and I’ve been working on it at least a few hours a day, pretty much every day, for the past few months. The screenshot at the top of this post is the latest, showing a colour timeline track feeding into an OpenGL shader.

There is still much to be done and it’s pretty gruelling at times as I’m having to go over old ground repeatedly, but I feel like it’s heading in the right direction, and I’m already creating new artworks using it that wouldn’t have previously been possible.

Realistically, a 1.0 release now isn’t going to happen until 2015. With a long solo project like this it is easy to find yourself on the long slide into a quiet madness of complexity and introspection, so I’m planning more regular updates so that my progress is at least kept in check by “real people”. To this end, if you have any comments, questions, or general messages of encouragement, I’d be happy to hear them.

Love these thoughts. Relative to your own work and preferences (since that’s now your priority – an important decision that focuses things), can you describe the workflow you’re targeting with the various UI styles – node-based, timeline-based, text/code-based? Is there some underlying mechanism (e.g. a scripting language, or an engine with APIs) with which higher-level things (like node-based and timeline-based UIs) interact? For example, is the node-based UI a convenient alternative to coding the connections in text, or is the node-based UI the only interface to that functionality?

Thanks for the comment, Tim. The core focus of this project is fundamentally to reduce the amount of repetitive coding I end up doing to make my projects, and to address the issue of keeping these projects alive over many years, so I can show an artwork I made several years ago as easily as I can a recent one.

For instance, I’ve just ordered a Kinect 2, and with the system I’m working on, I can write the new plugin to interface it into the wider system, and “instantly” get a higher resolution “Shadows of Light”, as using a new API doesn’t affect the rest of the composition.
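A minimal sketch of that idea in Python, with hypothetical names throughout. It is an illustration of swapping a sensor plugin behind a fixed interface, not the project's actual plugin API, and the frame sizes are arbitrary placeholders rather than real device resolutions:

```python
from typing import List, Protocol, Tuple


class DepthSource(Protocol):
    """Hypothetical plugin interface: all the composition knows about a sensor."""

    def resolution(self) -> Tuple[int, int]: ...

    def read_frame(self) -> List[List[int]]: ...


class FakeSensorV1:
    """Stand-in for an older sensor plugin. A real plugin would call the vendor SDK."""

    def resolution(self) -> Tuple[int, int]:
        return (4, 3)  # arbitrary placeholder size (width, height)

    def read_frame(self) -> List[List[int]]:
        width, height = self.resolution()
        # Constant fake depth value for every pixel.
        return [[1000] * width for _ in range(height)]


class FakeSensorV2(FakeSensorV1):
    """Stand-in for a newer, higher-resolution plugin with the same interface."""

    def resolution(self) -> Tuple[int, int]:
        return (8, 6)  # arbitrary placeholder size (width, height)


def shadow_mask(source: DepthSource, max_depth: int) -> List[List[int]]:
    """The 'composition': marks pixels nearer than max_depth.

    It depends only on the DepthSource interface, never on a specific sensor.
    """
    return [
        [1 if 0 < depth < max_depth else 0 for depth in row]
        for row in source.read_frame()
    ]
```

Calling `shadow_mask(FakeSensorV2(), 1500)` instead of `shadow_mask(FakeSensorV1(), 1500)` yields a larger mask without any change to `shadow_mask` itself, which is the property described above.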

I think the basic node-based interface ‘adequately’ encapsulates the idea of how data moves through and is processed by a computer. It visually shows the relationship between each stage of the process, and ensures that data output from one pin is compatible with another input pin, so a PNG image loaded from disc can meaningfully be turned into an OpenGL texture, as can a JPEG image, but a floating point number can’t be automatically turned into a colour without further hint from the programmer, for instance.
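The pin compatibility rule can be sketched roughly as follows. This is a Python illustration under stated assumptions: the type names, the `IMPLICIT_CONVERSIONS` table, and the `Graph` class are all hypothetical and are not taken from the actual system.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical data types carried by pins; the names are illustrative only.
IMAGE, TEXTURE, FLOAT, COLOUR = "image", "texture", "float", "colour"

# Conversions that may be applied automatically (source type -> target types).
# A decoded image can become a texture whatever file format it came from;
# a float has no implicit route to a colour.
IMPLICIT_CONVERSIONS = {
    IMAGE: {TEXTURE},
}


def can_connect(output_type: str, input_type: str) -> bool:
    """Return True if an output pin of one type may feed an input pin of another."""
    if output_type == input_type:
        return True
    return input_type in IMPLICIT_CONVERSIONS.get(output_type, set())


@dataclass
class Graph:
    """Holds links between pins, each pin given as a (node_name, pin_type) pair."""

    links: List[Tuple[tuple, tuple]] = field(default_factory=list)

    def connect(self, src: tuple, dst: tuple) -> None:
        # Refuse the link up front rather than failing later at run time.
        if not can_connect(src[1], dst[1]):
            raise TypeError(f"cannot connect {src[1]} output to {dst[1]} input")
        self.links.append((src, dst))
```

With this sketch, `Graph().connect(("png_loader", IMAGE), ("shader", TEXTURE))` succeeds, while connecting a `FLOAT` output to a `COLOUR` input raises `TypeError` until the programmer adds an explicit conversion node between them.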

While it is difficult for me to imagine what it might be like to learn programming these days (far more intimidating than when I started, I should think) it seems logical to me to break up the concepts of programming into blocks that can be logically linked together. Programming is a case of learning how computers process data, and having a logical set of processing tools at your disposal. It’s easy to get bogged down in the specifics of code, rather than the results. If you want to get the camera image from a Kinect, you need to write a bunch of related initialisation code, link to libraries, include headers, etc – this ephemera doesn’t directly solve the original “get image from camera” requirement (though it is obviously required) and isn’t logical to a less experienced coder.

There is still low-level coding available within the system. I use OpenGL shaders liberally in my work, and there is a JavaScript interface, so it’s not designed to be entirely GUI-based. Rather, I’m concerned with writing code only where I need to, and with making the entire system able to run under an emulator if needs be for future presentation, so I use popular open-source libraries, well-documented APIs, and flexible operating systems.

The timeline aspect of the software is an important one for me. Many node-based systems are concerned with creating a live system that is often interactive, but it’s a common requirement to reliably control parameters over time, and for this you need some kind of sequencer. While it would be possible to use existing MIDI/OSC sequencing applications to provide this control, it suits me to have this functionality incorporated.

You may ask, as I often have, why I don’t just use one or more of the excellent tools already on the market. Their code is much more mature, as are their interfaces, and the cost of the tools is tiny compared to the amount of time that is required to develop a new application. Mainly the reason is one of control – I own the source code, can steer it entirely in any direction of my own choosing, and make sure it is supported as long as I need it, which I truly hope will be for decades to come. This might seem a grand claim when most software goes through major upgrades every few years, but this is my aim.

Not sure I entirely covered all of your questions but hope that explains things a little more.

http://tooll.io/ is an interesting project to check out for ideas.

http://nodebox.net/node/ is another one. I thought Nodebox 2 was very cool, I see they’ve advanced to Nodebox 3 – I haven’t checked it out to see whether 3 is as interesting as 2.

> I think the basic node-based interface ‘adequately’ encapsulates the idea of how data moves through and is processed by a computer.

The challenge with node-based interfaces is deciding what level the nodes should operate at. I agree that it’s a good interface for routing, but it’s easy and tempting for such systems to incorporate nodes that operate at a lower level (logic operations) that, while certainly useful for occasional things, turn into spaghetti patches that seem like they would be much more straightforward and scalable to do in a text-based form. Obviously, the target customer for a programmable interface makes a huge difference, which is why your decision to target yourself (and people who are similar to you) as a primary driver is absolutely key.

Making a timeline tool is an interesting example. Sometimes being able to draw a curve in a timeline is the best way of doing something, and sometimes code-based things are part of the timeline, where a single instance of code might control/span the entire timeline. Many years ago (1998, apparently) I added the ability inside Keykit’s sequencer tool (the Group tool) to embed tool instances within an individual track. It actually worked surprisingly well – relying on the fact that “tools” within Keykit have a very standardized object interface for both display and set/get/play functionality. It *did* get a little hairy, implementation-wise, and I didn’t pursue it further, but it definitely showed me the extreme leverage value of providing complete object interfaces (covering both display as well as realtime operation) that allow one tool/object to be completely wrapped in another tool. I.e. the timeline tool itself can be an object with the same interface as other objects, allowing itself to be contained in other objects. For my description of this, in the context of Keykit, see this mailing-list announcement from 1998:

http://nosuch.com/keykit/mailarchive/msg01173.html

and look for the section at the end that starts “And finally, the ability to instantiate tools *within* tracks of a Group.”

To give a specific example, let’s take the ability to draw a curve in a timeline tool. In a typical timeline, the curve-drawing would be an inherent part of the timeline tool (and its UI). Another approach is the “tool within a tool” approach. In that alternate approach, there is a separate tool which encapsulates the “drawing a curve” feature – which includes both the GUI interface as well as the data which the curve outputs – as a separate tool that you can then embed at an arbitrary point within the timeline. The embedded tool within the timeline has a “span” – letting you scale the duration of the curve within a track of the timeline, independent of what shape the curve is. The timeline forwards all GUI things (both input and output) to the embedded tool – all it knows is that there’s some other tool which covers a particular region of the timeline (with parameters). This would then apply to LFOs and other “single-valued-data-channel” types of things as well. The main difference here is that the encapsulated “single-valued-data-channel” object includes both the generation/data-manipulation behavior AND the GUI interface to it, so that it can be embedded within other tools that know nothing about it other than the area within the timeline that is used to display it and trigger its operation when playing back.
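The “tool within a tool” arrangement might be sketched like this in Python. Everything here is a hypothetical illustration (the `EmbeddedTool` interface, `Ramp`, `Placement`, and `Track` are invented names, and this is not Keykit's actual object interface): the track knows only where each embedded tool sits and how long it spans, and forwards both sampling and drawing to it.

```python
from dataclasses import dataclass
from typing import List, Optional, Protocol


class EmbeddedTool(Protocol):
    """Hypothetical interface: everything a track knows about an embedded tool."""

    def value_at(self, position: float) -> float: ...  # position runs 0.0 to 1.0

    def draw(self, width: int) -> str: ...  # render into the width it is given


class Ramp:
    """A stand-in 'curve' tool: rises linearly from 0 to 1 across its span."""

    def value_at(self, position: float) -> float:
        return position

    def draw(self, width: int) -> str:
        return "/" * width


@dataclass
class Placement:
    tool: EmbeddedTool
    start: float     # seconds from the start of the track
    duration: float  # seconds; the 'span', independent of the curve's shape


class Track:
    def __init__(self) -> None:
        self.placements: List[Placement] = []

    def embed(self, tool: EmbeddedTool, start: float, duration: float) -> None:
        self.placements.append(Placement(tool, start, duration))

    def value_at(self, time: float) -> Optional[float]:
        """Forward playback to whichever tool covers this time, if any."""
        for p in self.placements:
            if p.start <= time < p.start + p.duration:
                # Rescale track time into the tool's own 0..1 range.
                return p.tool.value_at((time - p.start) / p.duration)
        return None

    def draw(self, columns_per_second: int) -> List[str]:
        """Forward display: each tool draws itself into the width of its span."""
        return [
            p.tool.draw(int(p.duration * columns_per_second))
            for p in self.placements
        ]
```

Stretching the same `Ramp` over 4 seconds or 8 seconds changes only the `Placement`, not the tool, and an LFO or any other single-valued source could be dropped in by implementing the same two methods.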

Using an interpreted language makes the creation and distribution of such tools much easier. I think the Nodebox project is definitely something to look at, in terms of the kinds of interfaces to a tool/node that make it embeddable. For example this page on how to write your own node in Nodebox: http://nodebox.net/node/documentation/advanced/programming-nodes.html

There are so many similar systems around these days (also Vuo and Magic), it’s interesting to see how fresh approaches compare to the older generation of Isadora/vvvv/Max/etc. Magic looks nice, and I found Vuo quite interesting as they’re obviously concerned with longevity of use, too. I believe they’re putting the source code into some kind of escrow so that if the author disappears or stops developing it, the code gets released.

I quite agree that such a system shouldn’t rely solely on low-level nodes like number addition/subtraction. Patches built that way are going to be slow and visually messy, but I think such nodes have their place, both for learners and when used sparingly where visual clarity is more important than hiding functionality away in a code box.

What I’m aiming for in my system is having just enough structure to offer me fast access to a wide range of disparate technologies that I can connect together with the absolute minimum of “glue code”. I’m not trying to abstract away shaders or sensors to the point that they’re so high-level as to be unrecognisable, so you still need to learn that stuff, but I see that as a good thing – that knowledge is transferable to other situations.

Also, regarding your tales of tool abstraction – again, a difficult balance of functionality and flexibility. Obviously it’s possible to abstract everything to an extreme degree, if you don’t mind the performance hit and other problems. I am building this thing in a modular way, so there is an API and it’s all abstracted out so writing your own plugins and timeline controls is all possible. This of course makes little sense at all when you’re the only one writing the plugins (although it’s quite useful to separate out dependencies) and I’m keeping an eye on myself to make sure I’m not using such techniques just because I can.

I’m trying not to replicate the mistakes I made with a previous software application I wrote called PatchBox which was a precursor to this one, and was crazy powerful but had such a bad user interface and was so monolithic with so many technologies, that I could never have hoped to release it without spending a long, long time just writing documentation. I used it for many installations and spent years developing it, but I would have to spend days (maybe weeks) just trying to get it to compile now.

The problem is one of incompatible aims. As an artist, I want a digital platform that is modern so I can use all the latest cool stuff, provides a fast, standardised interface that hides all the implementation code and just lets me focus on manifesting my ideas, and will run things I did years ago with little or no updating other than replacing a “Kinect V1” node with a “Kinect V2” node, for instance.

Of course this isn’t just down to my code. I need the APIs that I use to remain compatible (I’m using Qt 5, OpenGL, Boost, etc), and the underlying operating system, drivers, and hardware to all work with me to achieve this aim, and that’s a big problem because hardware and software manufacturers don’t earn more money if people don’t upgrade. We’ve seen that, left to their own choice, users will stay with Windows XP even if Windows 8 is available because it doesn’t actually make any difference to their work. At the other end of the scale, the mobile phone market has always been about having the latest technology from day one, so there’s a massive churn of hardware and software. Apple are doing this with their products, albeit at a slightly slower rate, and the fact that it’s so difficult to emulate their operating system is a big turn-off for me from the perspective of preserving the function of my work.

The aims of business are not entirely compatible with the aims of artists, and perhaps this is where part of my development conflict is coming from. Maybe I won’t earn a penny from selling this software because of it, but you can be sure I’m going to make a shed load of new art using it (and make money that way), and that’s the most important thing to me.